Tokenization & Lexical Analysis

Description:

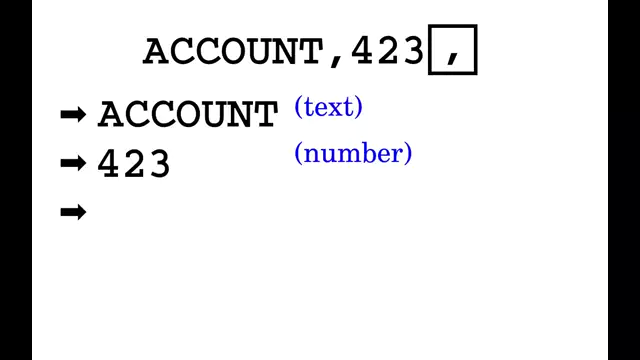

Tokenization (lexical analysis) loads, or deserializes, structured input data into computer memory as an implicit chain of tokens. This prepares the input for subsequent processing: parsing, syntactic and semantic analysis, conversion, translation, or execution.
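To make the idea concrete, here is a minimal sketch of a tokenizer in Python. The token kinds (`NUMBER`, `IDENT`, `OP`), the `Token` type, and the `tokenize` function are hypothetical names chosen for illustration and are not taken from the description above. The generator yields tokens one at a time, which is one way to read "an implicit chain of tokens": the chain is never built in full unless the consumer asks for it.

```python
import re
from typing import Iterator, NamedTuple


class Token(NamedTuple):
    kind: str   # token category, e.g. "NUMBER"
    text: str   # the exact characters matched in the input
    pos: int    # character offset of the token in the input


# Illustrative token specification (an assumption, not a standard grammar).
# Order matters: earlier alternatives win when several could match.
TOKEN_SPEC = [
    ("NUMBER", r"\d+(?:\.\d+)?"),   # integer or decimal literal
    ("IDENT", r"[A-Za-z_]\w*"),     # identifier
    ("OP", r"[+\-*/=()]"),          # single-character operator or parenthesis
    ("SKIP", r"\s+"),               # whitespace, discarded
    ("MISMATCH", r"."),             # any other character is an error
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source: str) -> Iterator[Token]:
    """Lazily yield tokens from source, skipping whitespace."""
    for match in MASTER.finditer(source):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(
                f"unexpected character {match.group()!r} at offset {match.start()}"
            )
        yield Token(kind, match.group(), match.start())


if __name__ == "__main__":
    for token in tokenize("x = 3.5 * (y + 2)"):
        print(token)
```

A parser or interpreter would consume this stream token by token. Keeping the character offset in each token is a common design choice because later stages can then report errors against the original input.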